Are you looking for information in tons of documents but struggle to find it? I have the same problem. So I started a literature search and setup a Data Science project on Semantic Search. To verify i’m heading in the right direction I did a POC on finding interesting phrases in the Holy Bible. This blog post is the first in a series, describes the outlines of the project and show the first result.

Why is finding information so important

There is no shortage in data but a lot of valuable information is just hard to find. Easy access to relevant information is crucial for people and organisations. There are many use cases of information retrieval in application domains such as Business, Legal, Environmental or Crime fighting.

A use case: I found an interesting use case in the Caselaw Access Project. The Caselaw Access Project (CAP), maintained by the Harvard Law School Library Innovation Lab, includes “all official, book-published United States case law and made it available on the internet. This is a important first step in making this information available to lawyers. Now the data is available researchers can use this data for advanced text analysis and help lawyers to find relevant documents for their clients.

Organisations need solutions for automatic collection and classification of documents. We want easy to use tools for querying documents in our own language to find the answer to our questions.

Goal of the project

The goal is: “Find solutions that help to find relevant text in documents”

I once had a quarel with my insurance on a damage claim and needed to find some legal arguments to support my case. Im not a lawyer so it took me a lot of time to find what i needed. Lucky for me I won the argument and it saved me a lot of money. But it made me think.

“Is there some solution to the problem of finding answers in a large set of documents when you do not fully understand the terminology?”

I started this project because i’m very interested in extracting knowledge from text and the technology that helps us with this such as Natural Language Processing and Machine Learning.

My secondary goal is: “Learning exiciting technology that will help me to expand my carreer in new and glorious directions”

- I am a programmer with a lot of experience in data processing (ETL, WebServices)

- I love data science and started this project in my own time

- I have worked with Natural Language Processing and Python

- I love to learn more about Machine Learning

How can Machine learning help us to find things

Why is it so difficult to find information? First information is hidden in unstructured data sources such as documents, websites and emails and needs to be collected and stored in some central repository for querying. Then you need to specify a search query and use the exact terminology as used in the documents. It gets even harder if documents are written in multiple languages, use terminology by different authors with different writing styles.

Semantic Search is a solution that can help to solve this problem and its based on Natural Language Processing and Machine Learning. So we need to know more about how to apply this technology to our problem.

Approach

I started collecting interesting articles and read all I could find about the subject. Then try to find solutions on the internet (open source or commercial products). Search engines such as ElasticSearch and SOLR and their commercial equalivents (LucidWorks) will also be considered. At the end I hope to have gained insight in the problem, found some solutions and learned a lot.

Experiment in Colab: To get a better understanding I do some experiments by myself. For my experiments I’m developing data pre-processing processes and machine learning models in Google Colab. Colab is a hosted Data Science work environment that allows me to store all my data and execute Python scripts for data analysis and visualisation.

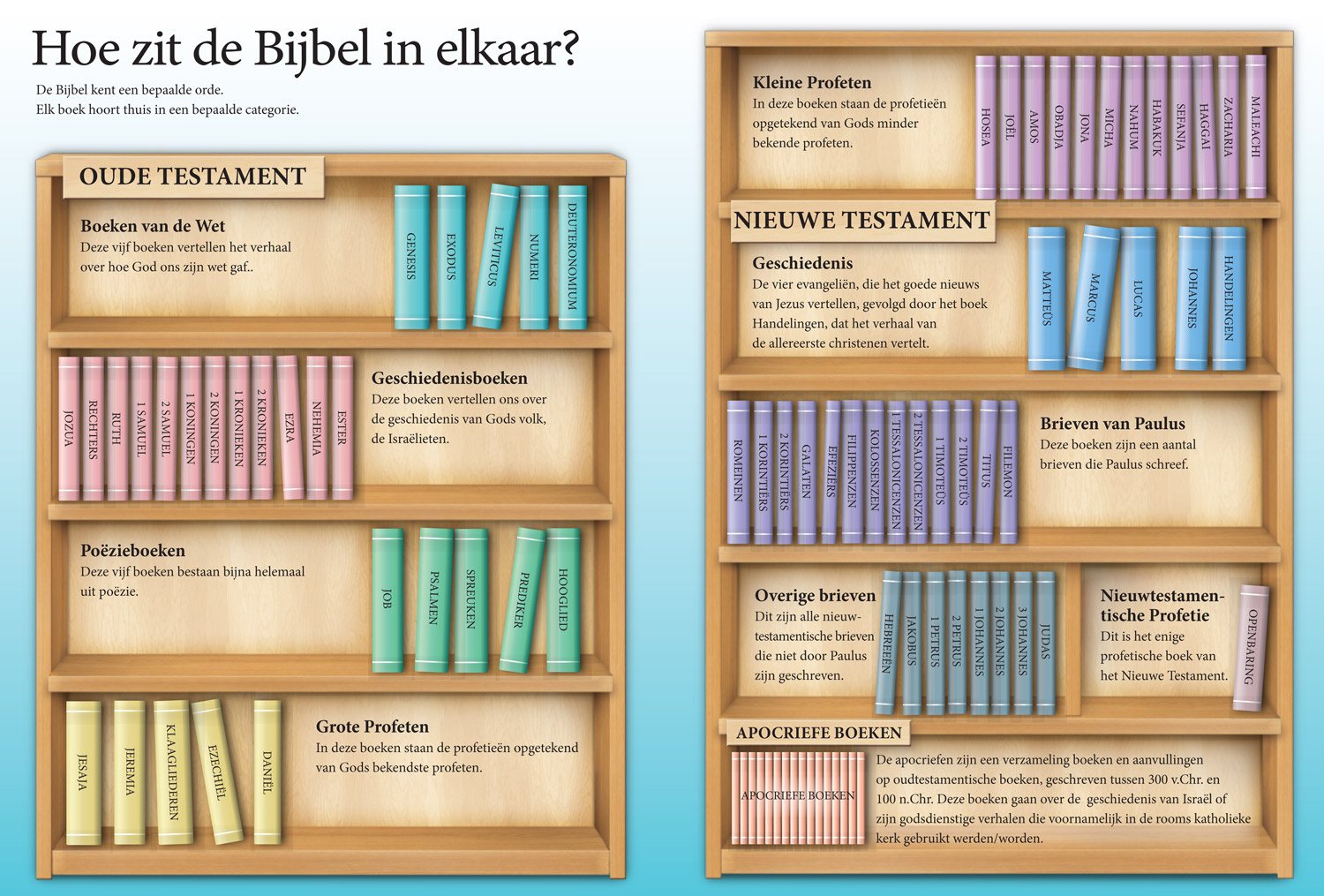

Analyzing the Holy Bible: To test and evaluate my results I need access to a set of documents that covers a limited set of subjects, is well written en has some degree of structure. I therefore choose to use the bible as a research subject because it is a reasonable structured knowledge domain with well defined boundaries and text is available in multiple languages.

A first result: Here is an example of me querying the bible using a multi lingual Universal Sentence Encoder:

The query in this example is in English and matched with a set of documents (bible phrases) written in Dutch. Under the hood all documents where transformed into a Dense Vector presentation. The document vectors (144000) where stored in an index. Then at query time the query string is also converted into a Dense Vector and a fast search algorithm (Faiss) retrieves the top ten matches from the index.

This is an simple example how Machine Learning can be used as a search tool. Now I have to find out how well this works and under what conditions. Compare this techology to other solutions, find out how to scale up and transform it into a working solution thats ready for production.

Semantic search is just one application of machine learning. There are many other that i have mentioned in my “Project deliverables” list.

Next steps:

- Define a formal evaluation process

- Collect data for evaluation data sets (semantic similar sentences in parallel translations, biblical cross references, bible topics)

- Train some models on these datasets

- Evaluate the results

Im now working on this and in a next post I hope to show some findings.

Technology in this project: Python, NumPy, Spacy, NLTK, scikit-learn, Tensorflow, TF/IDF, Gensim Doc2Vec, BERT, Universal Sentence Encoder, etcetera.

I hope this project will let me find what im looking for…..

Project deliverables

I have set myself some goals, that I hope to fullfill. But i have to be realistic some may be too difficult or consume too much time.

- Practical applications

- Find relevant documents in a large set with queries in human language

- Extract metadata from documents in order to get an overview

- Text summarisation

- Classification

- Topic modeling

- Keyword extraction

- Enhanced features

- Find cross references within and across documents

- Extract factual data: people, places, locations, dates and activities (named entities)

- Develop methods and processes

- Collecting documents (webscraping, scanning)

- Preprocessing documents (cleaning, organizing, tagging)

- Fine tune pretrained model to provide better support for a specific domain language, terminology (test on Dutch bible text corpus)

- Challenges

- Supporting Multiple languages with a single solution (English, Dutch, German …)

- Supporting domain specific terminology

References:

- Caselaw Access Project: https://lil.law.harvard.edu/projects/caselaw-access-project/

- Free Course: Data Science Foundations https://cognitiveclass.ai/learn/data-science